There’s no question Hurricane Andrew was a wake-up call for the industry. The event paved the way for catastrophe models to revolutionize the way (re)insurers quantify, price, transfer, and manage catastrophe risk. Prior to the adoption of catastrophe models, (re)insurers used rough rules of thumb based on premiums to estimate potential losses. Andrew caused losses many times higher than what these formulas had projected.

The catastrophe models got it right because of their unique structure and architecture. The models provide the infrastructure for starting with an event, calculating the location-level intensities caused by that event, estimating the damage based on the replacement values of exposed properties (not premiums), and finally determining the ultimate financial loss.
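The four steps described above can be sketched as a small pipeline. This is a minimal illustration, not any vendor's actual model: the class names, the exponential distance decay, the cubic damage function, and every parameter value are assumptions invented for the example.

```python
import math
from dataclasses import dataclass


@dataclass
class Event:
    peak_wind_mph: float   # intensity at the landfall point
    landfall_x_km: float   # position along an idealized straight coastline


@dataclass
class Property:
    x_km: float               # position along the same coastline
    replacement_value: float  # cost to rebuild (not the premium)
    deductible: float


def local_intensity(event, prop, decay_km=100.0):
    """Step 2: wind speed at the property (toy exponential decay with distance)."""
    distance = abs(prop.x_km - event.landfall_x_km)
    return event.peak_wind_mph * math.exp(-distance / decay_km)


def damage_ratio(wind_mph, threshold=50.0, total_loss=200.0):
    """Step 3: fraction of replacement value damaged (toy cubic ramp)."""
    if wind_mph <= threshold:
        return 0.0
    frac = min((wind_mph - threshold) / (total_loss - threshold), 1.0)
    return frac ** 3


def insured_loss(event, prop):
    """Step 4: apply policy terms (here only a deductible) to the ground-up damage."""
    wind = local_intensity(event, prop)
    ground_up = damage_ratio(wind) * prop.replacement_value
    return max(ground_up - prop.deductible, 0.0)


# Step 1: start with an event, then run it against one exposed property.
storm = Event(peak_wind_mph=150.0, landfall_x_km=0.0)
home = Property(x_km=0.0, replacement_value=500_000.0, deductible=10_000.0)
print(round(insured_loss(storm, home), 2))
```

The point of the structure is that the loss is driven by replacement value and local intensity, which is why premium-based rules of thumb could not anticipate a loss like Andrew's.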

Catastrophe modeling has evolved into a global standard technology used by (re)insurers around the world. For every type of peril (hurricanes, wildfires, earthquakes) and for every country and region, models have these same four primary components.

Most importantly, catastrophe models are built around large stochastic event sets representing the probabilities of events of different sizes and severities by location. This enables the most valuable output of a catastrophe model — the Exceedance Probability (EP) curve — which provides the probabilities of losses of different sizes on a particular portfolio of properties.
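To make the EP curve concrete, here is a minimal sketch of how one could be read off a set of simulated annual losses. The ten loss values are invented for illustration (real stochastic catalogs contain many thousands of simulated years), and the function names are hypothetical.

```python
def exceedance_probability(annual_losses, threshold):
    """Fraction of simulated years whose loss meets or exceeds the threshold."""
    hits = sum(1 for loss in annual_losses if loss >= threshold)
    return hits / len(annual_losses)


def ep_curve(annual_losses, thresholds):
    """List of (loss threshold, exceedance probability) points."""
    return [(t, exceedance_probability(annual_losses, t)) for t in thresholds]


# Invented portfolio losses for ten simulated years, in $ billions.
simulated_years = [0, 0, 2, 0, 15, 1, 0, 40, 0, 5]

for threshold, prob in ep_curve(simulated_years, [1, 10, 40]):
    print(f"P(annual loss >= ${threshold}B) = {prob:.0%}")
```

Each point on the curve answers the question an insurer cares about: how likely is it that this portfolio loses at least this much in a year?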

For example, rather than simply estimating that a Category 5 hurricane making landfall near Miami would result in an insured loss of $150 billion, the hurricane models assign a probability to that $150 billion loss. Today, the annual probability of a hurricane loss of that size in the United States is about 1%; in other words, it is a one in 100-year loss (on average). The models estimate the probabilities of losses of all sizes from hurricanes striking everywhere along the coastline.
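The link between a return period and an annual probability is simple arithmetic, sketched below. The multi-year calculation assumes each year is independent with the same probability, which is a simplification added here and not a claim from the models themselves; the 30-year horizon is an arbitrary illustration.

```python
def annual_probability(return_period_years):
    """A one in N-year loss has a 1/N chance of being met or exceeded in any year."""
    return 1.0 / return_period_years


def prob_at_least_once(return_period_years, horizon_years):
    """Chance of at least one such loss over a horizon, assuming independent years."""
    p = annual_probability(return_period_years)
    return 1.0 - (1.0 - p) ** horizon_years


print(annual_probability(100))                 # 0.01, i.e. 1% per year
print(round(prob_at_least_once(100, 30), 2))   # chance over a 30-year horizon
```

Under those assumptions, a "one in 100-year" loss is far from a once-in-a-lifetime curiosity: over three decades the chance of seeing at least one is roughly one in four.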

Essentially, the models provide a complete view of the loss potential for the industry as a whole and for the specific portfolios of individual insurers. Armed with this information and fully probabilistic models, insurers can make more informed decisions — on how much reinsurance to buy, on risk-based premiums for their own policyholders, and on underwriting guidelines.

As the science has evolved and advanced over the years, and actual events have provided additional data for model validation, the model components have become more complex and able to account for more variables and event characteristics. Additionally, advances in computing power have enabled the models to capture and utilize more data, at much higher resolution, than the original models. But the fundamental structure of the models has remained the same, demonstrating the robustness of this powerful technology.

Recent Advances

Recent advances in modeling technology include accounting for the impacts of climate change. The catastrophe models have been based on extrapolations of historical data under the assumption that history provides a good guide to the future. For example, the hurricane models are based on data going back to 1900. Until recently, this was a reasonable assumption. Today, however, given that climate change is known to be impacting weather-related events, catastrophe models must go beyond the data in the historical record.

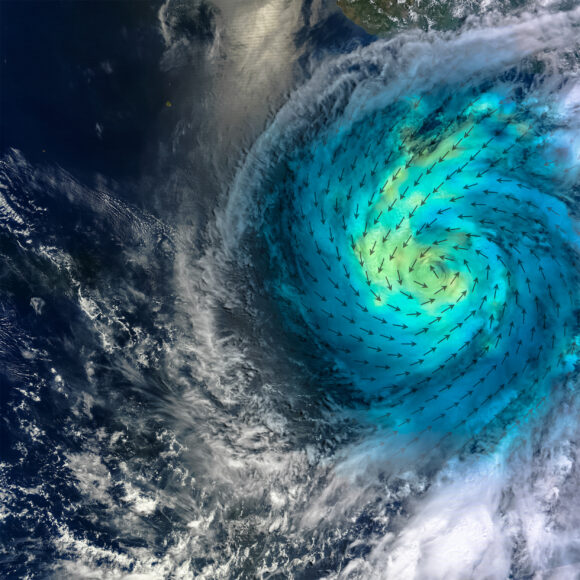

The current scientific consensus is that climate change is already impacting hurricanes, floods, winter storms and wildfires with medium to high confidence. With respect to hurricanes, global temperature increases are leading to more intense hurricanes and there’s an ongoing shift to a higher proportion of major Category 3-5 hurricanes. Recent hurricane activity has provided this evidence. But how can this be incorporated into models that rely on data going back 100 years? Essentially, the historical data must be adjusted to reflect current climate conditions.

Recent studies conducted by KCC scientists and other climate experts have concluded that a one degree Celsius temperature change results in a 2.5% increase in hurricane wind speeds. Since 1900, there has been a global temperature increase of 1.1 degrees Celsius, resulting in a 2.75% increase in wind speeds. While a 2.75% increase may not sound like a lot, losses increase exponentially with wind speeds. According to analyses conducted by KCC scientists, insured losses are 11% higher today than they would have been if global temperatures had remained constant.
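The arithmetic behind these figures can be checked in a few lines. The three inputs are the numbers quoted above; the ratio computed at the end is only a back-of-the-envelope summary of how much faster losses grow than wind speeds, not a parameter taken from the KCC models.

```python
WIND_INCREASE_PER_DEG_C = 0.025  # 2.5% per degree Celsius, as quoted above
WARMING_SINCE_1900_C = 1.1       # degrees Celsius, as quoted above
LOSS_INCREASE = 0.11             # 11% higher insured losses, as quoted above

# Linear scaling of wind speed with warming.
wind_increase = WIND_INCREASE_PER_DEG_C * WARMING_SINCE_1900_C

# How many percent of extra loss per percent of extra wind speed (rough ratio).
loss_to_wind_ratio = LOSS_INCREASE / wind_increase

print(f"Wind speed increase: {wind_increase:.2%}")
print(f"Loss increase per 1% of wind increase: about {loss_to_wind_ratio:.1f}%")
```

In other words, on these figures each percentage point of added wind speed corresponds to roughly four percentage points of added insured loss.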

Perhaps more importantly, KCC analyses demonstrate that the EP curves are not increasing uniformly across all return periods; rather, climate change is altering the shape of the curve. The lower return periods (the one in five, 10 and 20-year losses) are rising faster than the one in 100-year and more extreme losses.

The only landfall locations that can generate an extreme $150 billion hurricane loss are Miami, Galveston/Houston, and the Northeast. On the other hand, more major hurricanes occurring all along the coast are resulting in more $10 billion, $20 billion and $30 billion insured losses. Losses of this size may not be solvency threatening for most insurers, but they can negatively impact annual financial results.

While we don’t know when the $150 billion loss will occur, based on the current climate, (re)insurers should expect a $10 billion hurricane loss every other year on average and a $20 billion loss at least every five years. A 10-year return period hurricane loss in the U.S. is now $40 billion. These numbers reflect current property replacement values, including COVID-driven cost increases, along with climate change.

Future Views

Traditionally, the models were designed to assess the current risk and not to project losses out into the future. With climate change impacting several weather-related perils, (re)insurers now require future views of risk.

To create these future views, scientists can rely on the projections in the latest Intergovernmental Panel on Climate Change (IPCC) assessment report, AR6. This report provides the current scientific consensus on projected global temperature increases under various emissions scenarios going out to the year 2100. Catastrophe modelers use these projections to develop climate-conditioned catalogs that (re)insurers use to see how their EP curves may change under the different scenarios and time periods.
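As a deliberately simplified sketch of the idea, the snippet below conditions a tiny event catalog on a temperature scenario by scaling wind speeds with the 2.5% per degree relationship quoted earlier in this article. Real climate-conditioned catalogs are built with far more care than a single scaling factor; the scenario names and warming values here are placeholders, not AR6 projections, and the function name is hypothetical.

```python
WIND_INCREASE_PER_DEG_C = 0.025  # relationship quoted earlier in the article


def condition_catalog(wind_speeds_mph, additional_warming_c):
    """Scale every event's wind speed for a given amount of additional warming."""
    factor = 1.0 + WIND_INCREASE_PER_DEG_C * additional_warming_c
    return [w * factor for w in wind_speeds_mph]


# Toy catalog of event wind speeds under today's climate (invented values).
current_catalog = [100.0, 120.0, 150.0]

# Placeholder scenarios: additional warming in degrees Celsius (hypothetical).
scenarios = {"lower_warming": 1.0, "higher_warming": 2.0}

for name, warming in scenarios.items():
    conditioned = condition_catalog(current_catalog, warming)
    print(name, [round(w, 1) for w in conditioned])
```

Each conditioned catalog would then be run through the same loss calculation as the current-climate catalog, giving one EP curve per scenario and time period to compare.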

Future advances in modeling technology include faster and more frequent model updates. The speed at which environmental factors are changing means the models must be updated more frequently than they’ve been in the past. Model updates have not always been welcomed by (re)insurers because the numbers can change — sometimes dramatically — without sufficient explanation or justification. It behooves the modeling companies to make the model update process more efficient and transparent for (re)insurers.

While catastrophe models existed before Hurricane Andrew, it was this event that led to widespread industry adoption of this powerful technology. The fundamental structure of catastrophe models has not changed, but the models have advanced and evolved to incorporate more variables, run at much higher resolution, and most recently, incorporate the impacts of the changing environment, i.e., climate change. The models remain essential tools for (re)insurers to quantify, price and manage extreme event risk.

Clark is co-founder and CEO of Boston-based Karen Clark & Co., a catastrophe modeling company established in 2007 to help insurers better manage catastrophe risk. In 1987, she founded the first catastrophe modeling company, Applied Insurance Research, which became AIR Worldwide when it was acquired in 2002 by Insurance Services Office Inc.
