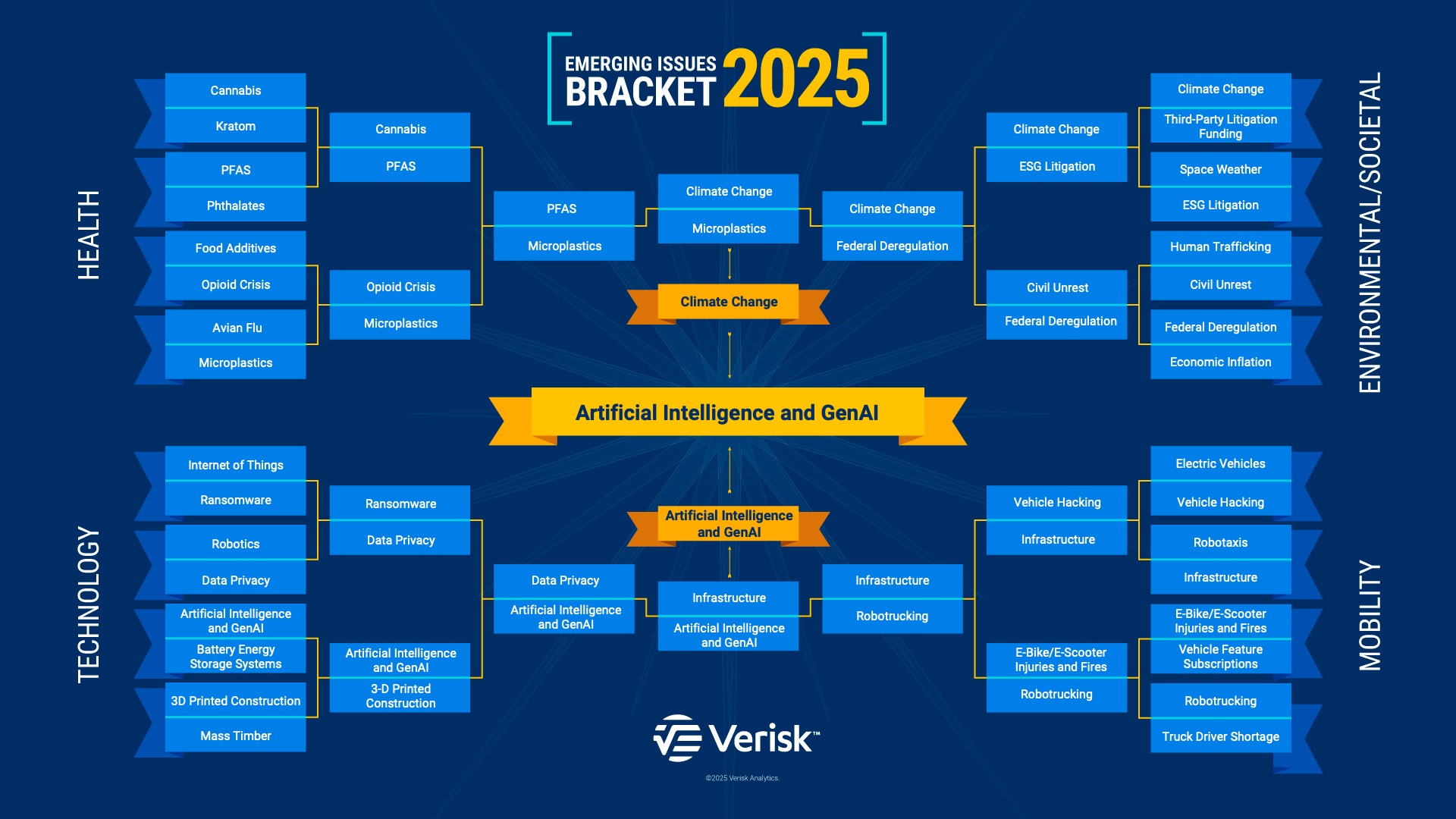

Collegiate teams that competed in the men’s and women’s basketball tournaments turned heads in March. Now, the topics that reached the final rounds of Verisk’s recent emerging issues bracket could hold the collective attention of property/casualty insurance professionals for years to come.

The global data analytics provider hosted an hour-long webinar on June 10 highlighting the top issues as voted on by industry experts. Though climate change, microplastics and infrastructure made it to the “Final Four,” artificial intelligence and generative AI repeated as the top issue. As the technology evolves and states eye AI-related legislation, edge cases and AI hallucinations continue to pose risks.

AI Proliferation Prompts Legislation

AI’s technological strides have prompted action from state legislatures across the U.S. to respond to potential risks that are still not well understood.

Policymakers have mounted a “pretty swift response” by promulgating targeted AI laws and regulations. This year alone, most states have introduced some form of AI regulation, but only a handful have signed that legislation into law, said Laura Panesso, associate vice president of government relations at Verisk, during the webinar, which is available on demand on the Verisk website.

“This trend is indicative of a growing desire to try to regulate a technology that’s really evolving at a speed that we can barely keep up with,” Panesso said.

The National Association of Insurance Commissioners developed a bulletin that sets expectations for how insurers should govern the development, acquisition and use of AI in decision-making and encourages the creation and use of testing methods to identify bias and discrimination. Twenty-four jurisdictions have adopted this bulletin, while California, Colorado and New York have also released separate, state-specific AI regulations and bulletins.

However, without a federal framework for AI regulation or development, Panesso said states are forging their own paths in managing the growing challenges posed by the emerging tech. Forty states have introduced or enacted some form of AI legislation or regulation, which ranges from studying the impacts of AI to managing aspects of the technology.

Major themes noted in the Verisk webinar include requirements for deployers; ownership rights over the information used to train models and over model outputs; and algorithmic pricing and discrimination. On the generative AI front, there’s been a push to regulate deepfakes and intimate image creation, and states are also beginning to set parameters for the use of generative AI systems, Panesso said.

Utah Senate Bill 26, for example, was signed into law this session. It enacts provisions related to the use of generative artificial intelligence in consumer interactions. The law requires disclosures when gen AI is used, establishes liability for violations of consumer protection laws and provides a safe harbor for certain disclosures.

Edge Cases and Gen AI Hallucinations

The focus has been on how powerful and innovative AI is, but the technology is not perfect and can go off the rails. Greg Scoblete, a principal on Verisk’s emerging issues team, pointed to two specific areas: edge cases and statistics.

Edge cases, he explained, are scenarios for which little relevant data exists in the data set used to build a specific AI model, and they appear to be prime examples of an AI system’s potential to cause damage or hurt someone.

AI and machine learning techniques support numerous automotive features that improve safety and efficiency, and as vehicles’ autonomous capabilities advance, use of this technology is expected to increase. But those advances also have downsides, Scoblete explained.

“We are already seeing reports of edge case-style errors leading to incidents and mishaps,” he said. In the United Kingdom, for example, a luxury automobile with adaptive cruise control reportedly accelerated to over 100 mph in a 30-mph zone on two occasions, apparently because its object recognition system encountered a roadway marking it couldn’t properly interpret and read it as a command to accelerate the vehicle, Scoblete added.

In the U.S., a vehicle with adaptive cruise control hit the top of an overturned truck on a highway, and a federal investigation into that accident suggested it occurred, in part, because the top of a truck was not something its AI image recognition system was trained to recognize. The top of the truck represents an edge case: it’s not something one would expect to see on a highway, unlike traffic lane markers and the backs of cars.

“The difference between AI and a person, at least today, is that while you and I may have never seen the top of a truck either, we would know immediately and instinctively that if one of them was in front of us on the highway, the last thing we would do is drive into it,” Scoblete said. “With AI, at least today, we can’t be sure how it will react when it encounters an edge case.”

In addition to edge cases, Scoblete said generative AI output errors can also afflict vehicles. These hallucinations are driven less by data than by how a model predicts its output, he added. Often, those predictions are correct, but not always.

To date, at least 121 lawyers or litigants have filed legal briefs with errors attributed to a generative AI program. Scoblete said this reflects the findings of a pair of Stanford University studies: generative AI can produce a significant number of erroneous outputs when dealing with legal subject matter.

“We have to ask, do we think that the legal profession is the only professional services industry where accuracy and rigor in their work product are important?” Scoblete said. “Do you think they’re the only professional services industry that might be under pressure to enhance their efficiency with generative AI tools?”

Also, according to a data set maintained by George Washington University, at least 11 generative AI product liability lawsuits have been filed to date. Some legal observers question, Scoblete said, whether the product liability tort regime developed for physical products will apply to virtual AI products at all.

“One of the issues here is the nature of the injury,” he said. “As AI embeds itself into more and more physical products like cars, its capacity to cause property damage or injury may grow.”

This article was originally published by Claims Journal
